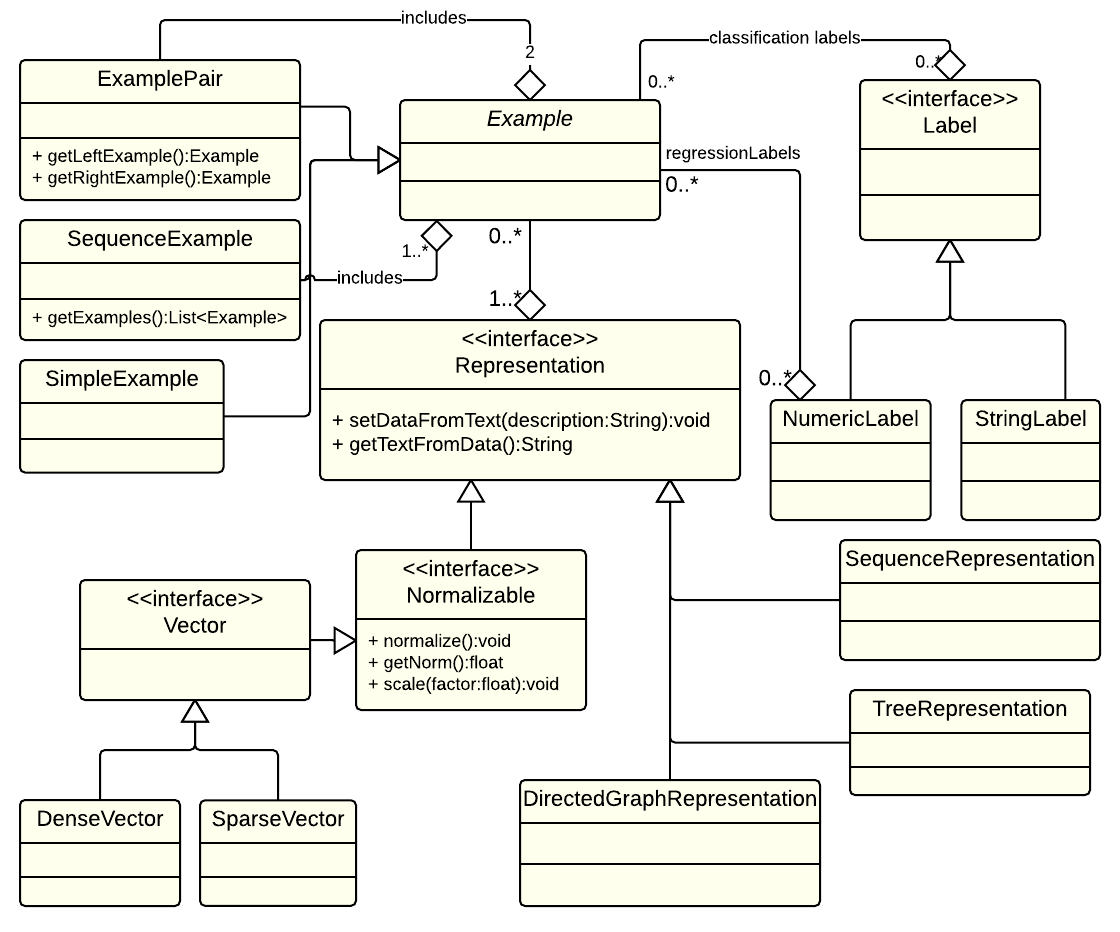

In KeLP, a machine learning instance is modeled by the class Example. As illustrated in the figure above, there are three implementations of this class:

- SimpleExample: it models an individual machine learning instance. It is suitable for most scenarios.

- ExamplePair: it models machine learning instances naturally structured into pairs, such as question-answer in Question Answering or text-hypothesis in Recognizing Textual Entailment.

- SequenceExample: it models a sequence of Examples, each containing a set of Representations and a set of Labels. This object is used by machine learning methods operating over sequences.

Every example has a set of StringLabels for classification tasks and a set of NumericLabels for regression problems. Multiple labels associated with a single example make it possible to tackle multi-label classification or multi-variate regression tasks. An example can also have no label, as in clustering problems.

Furthermore, an example is composed of a set of representations. This makes it possible to model a data instance from different viewpoints and to learn a joint model in which multiple representations are exploited at the same time (e.g., through kernel combinations).
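The idea of labels plus several named representations, combined through a weighted sum of kernels, can be sketched in plain Java. This is only an illustration: SketchExample and KernelCombinationSketch are hypothetical classes written for this page, not KeLP's Example or kernel API, and the representation names "bow" and "embedding" are assumptions.

```java
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical stand-in for an example; this is NOT KeLP's Example class.
class SketchExample {
    // An empty label set models an unlabeled instance (e.g. for clustering).
    final Set<String> labels = new LinkedHashSet<>();
    // Each representation is a named "view" of the same instance.
    final Map<String, double[]> representations = new LinkedHashMap<>();

    SketchExample label(String label) {
        labels.add(label);
        return this;
    }

    SketchExample representation(String name, double... values) {
        representations.put(name, values);
        return this;
    }
}

// Hypothetical kernel combination: a weighted sum of linear kernels.
class KernelCombinationSketch {
    // Linear kernel (dot product) computed on one named representation.
    static double linear(SketchExample a, SketchExample b, String name) {
        double[] x = a.representations.get(name);
        double[] y = b.representations.get(name);
        double sum = 0.0;
        for (int i = 0; i < x.length; i++) {
            sum += x[i] * y[i];
        }
        return sum;
    }

    // Weighted sum of the per-representation kernels.
    static double combined(SketchExample a, SketchExample b, Map<String, Double> weights) {
        double total = 0.0;
        for (Map.Entry<String, Double> w : weights.entrySet()) {
            total += w.getValue() * linear(a, b, w.getKey());
        }
        return total;
    }

    public static void main(String[] args) {
        // Two labels on one example: a multi-label classification instance.
        SketchExample a = new SketchExample().label("sport").label("news")
                .representation("bow", 1, 0, 2)
                .representation("embedding", 0.5, 0.5);
        // No labels at all: still a valid instance.
        SketchExample b = new SketchExample()
                .representation("bow", 1, 1, 1)
                .representation("embedding", 1.0, 0.0);

        Map<String, Double> weights = new LinkedHashMap<>();
        weights.put("bow", 1.0);
        weights.put("embedding", 2.0);
        System.out.println(combined(a, b, weights)); // prints 4.0
    }
}
```

Addressing representations by name keeps each kernel tied to one view of the data, so adding a new view does not affect the kernels defined on the others.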

Existing Representations

KeLP supports both vectorial and structured data to model learning instances.

For example, SparseVector can host a Bag-of-Words model, while DenseVector can represent data derived from low-dimensional embeddings. Both are available in kelp-core, which provides the standard environment offered by most existing machine learning platforms.
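To make the difference between the two vector types concrete, here is a minimal sketch in plain Java. It does not use KeLP's SparseVector or DenseVector classes: VectorSketch and its methods are hypothetical names, with a map from feature name to value standing in for a sparse vector and a fixed-length array standing in for a dense one.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

// Hypothetical helper, not part of KeLP.
class VectorSketch {
    // Bag-of-Words as a sparse vector: only words that occur are stored.
    static Map<String, Double> bagOfWords(String text) {
        Map<String, Double> counts = new HashMap<>();
        for (String token : text.toLowerCase(Locale.ROOT).split("\\s+")) {
            if (!token.isEmpty()) {
                counts.merge(token, 1.0, Double::sum);
            }
        }
        return counts;
    }

    // Sparse dot product: iterate over the smaller map and look up the larger one.
    static double sparseDot(Map<String, Double> a, Map<String, Double> b) {
        Map<String, Double> small = a.size() <= b.size() ? a : b;
        Map<String, Double> large = (small == a) ? b : a;
        double sum = 0.0;
        for (Map.Entry<String, Double> e : small.entrySet()) {
            Double other = large.get(e.getKey());
            if (other != null) {
                sum += e.getValue() * other;
            }
        }
        return sum;
    }

    // Dense dot product: every dimension is stored, so the lengths must match.
    static double denseDot(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("dimension mismatch");
        }
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            sum += a[i] * b[i];
        }
        return sum;
    }

    public static void main(String[] args) {
        Map<String, Double> first = bagOfWords("the cat sat on the mat");
        Map<String, Double> second = bagOfWords("the dog");
        System.out.println(sparseDot(first, second)); // prints 2.0
        System.out.println(denseDot(new double[] {1, 2}, new double[] {3, 4})); // prints 11.0
    }
}
```

The sparse form pays only for the features that are present, which suits large vocabularies. The dense form suits short vectors in which most dimensions are non-zero.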

kelp-additional-kernels provides structured representations:

- TreeRepresentation: it models a tree structure that can be employed for representing syntactic trees.

- SequenceRepresentation: it models a sequence that can be employed for representing, for example, sequences of words.

- DirectedGraphRepresentation: it models a directed unweighted graph structure, i.e., any set of nodes and directed edges connecting them. Edges do not have any weight or label.

The nodes in these three representations are associated with a generic structure, StructureElement, which contains the node label and optional additional information. For example, in a TreeRepresentation of a sentence, the leaves can represent lexical items: the node label represents the word, and the additional information can be a vector representation of that word, such as those produced by Word Embedding methods (e.g., Word2Vec).
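The relationship between a structure, its nodes, and the content attached to each node can be sketched as follows. The classes below are hypothetical and only mirror the description above. They are not KeLP's TreeRepresentation or StructureElement, and the "embedding" key and its values are made up for illustration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical node content: a label plus optional additional information.
class SketchElement {
    final String label;
    final Map<String, Object> additionalInfo = new HashMap<>();

    SketchElement(String label) {
        this.label = label;
    }
}

// Hypothetical tree node that carries a SketchElement as its content.
class SketchTreeNode {
    final SketchElement content;
    final List<SketchTreeNode> children = new ArrayList<>();

    SketchTreeNode(String label, SketchTreeNode... kids) {
        this.content = new SketchElement(label);
        for (SketchTreeNode kid : kids) {
            children.add(kid);
        }
    }

    boolean isLeaf() {
        return children.isEmpty();
    }

    // Parenthesized rendering of the subtree rooted at this node.
    String toBracketString() {
        StringBuilder sb = new StringBuilder("(").append(content.label);
        for (SketchTreeNode child : children) {
            sb.append(' ').append(child.toBracketString());
        }
        return sb.append(')').toString();
    }

    // Collects the leaves (here, the lexical items) from left to right.
    List<SketchTreeNode> leaves() {
        List<SketchTreeNode> out = new ArrayList<>();
        if (isLeaf()) {
            out.add(this);
        }
        for (SketchTreeNode child : children) {
            out.addAll(child.leaves());
        }
        return out;
    }
}

class TreeSketchDemo {
    static SketchTreeNode sampleTree() {
        SketchTreeNode dogs = new SketchTreeNode("dogs");
        // Additional information on a lexical leaf: an illustrative word vector.
        dogs.content.additionalInfo.put("embedding", new double[] {0.1, 0.7});
        SketchTreeNode bark = new SketchTreeNode("bark");
        return new SketchTreeNode("S",
                new SketchTreeNode("NP", dogs),
                new SketchTreeNode("VP", bark));
    }

    public static void main(String[] args) {
        SketchTreeNode tree = sampleTree();
        System.out.println(tree.toBracketString()); // prints (S (NP (dogs)) (VP (bark)))
        System.out.println(tree.leaves().size());   // prints 2
    }
}
```

Keeping the label and the additional information in a separate content object means the same node content could be reused in a sequence or a graph, which matches the description of a single generic element shared by the three structures.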

Finally, a StringRepresentation is included to store plain text, which is useful for associating a comment with an example.

To generate input data structures for KeLP, please refer to this page.